Artificial intelligence felt like a game-changer when we first discovered that sending text-based prompts to chatbots could return answers ranging from simple to highly technical. These tools could create summaries and write entire documents, emails, and more, using only text input.

Over time, users noticed a gap: responses fell short of what they could be because these tools worked only with text and could not take in other types of files, such as images, PDFs, and spreadsheets. Multimodal AI filled this gap.

Multimodal AI refers to machine learning models that can understand and process multiple types of data simultaneously, such as text, images, audio, and video. For users, this came as a relief: they can now upload multiple files at once and interact with the tool without spending extra time explaining every detail in text.

Multimodal AI has become an important part of industries that depend on advanced data analysis, such as healthcare, finance, automotive, and manufacturing, among others.

To gauge the impact of multimodal AI in these industries, consider the latest figures from Markets and Markets, which expects the global multimodal AI market to reach USD 4.5 billion by 2028.

Read on to explore how multimodal AI is applied across industry sectors, what benefits it offers, and more.

Multimodal AI is an AI system that can process and integrate information from multiple modalities or types of data.

Unlike traditional single-modality AI systems, multimodal AI systems do not rely on a single source of data for responses; they process multiple sources at once, including text, images, sounds, and video, to produce responses that are more accurate and relevant.

The ability to integrate data of different types makes AI systems better at handling complex scenarios. This type of AI evolved from the need to handle prompts that draw on several sources at once. NLP models handled text prompts and computer vision models handled image-based prompts, but what if someone wants an AI model to analyze a picture and answer a written question about it in the same prompt? This is exactly where multimodal AI helps: users can combine different types of data in a single prompt, and the model analyzes them together. One of the most notable examples of multimodal AI is ChatGPT.

Multimodal AI is backed by several deep learning models, each designed to handle a specific type of data. For example, convolutional neural networks (CNNs) process visual inputs such as images and video, recurrent neural networks (RNNs) manage sequential and time-based data, and transformer models handle complex language understanding.

Different techniques are used to connect these models; have a look at two examples:

These techniques bring all inputs into a single shared representation, which lets multimodal AI make sense of complex information and deliver accurate, real-time results.
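To make the idea of a shared representation concrete, here is a minimal Python sketch. It assumes each modality has already been turned into a feature vector by its own encoder (for example, a CNN for images) and that small linear projections map those vectors into one common space where they can be compared. The `PROJECTIONS` values and all feature vectors are hypothetical, hand-picked numbers; real systems learn these projections during training, and cosine similarity is only one common way to compare vectors in the shared space.

```python
import math

# Hypothetical projection matrices: each one maps a modality's own feature
# vector into the same 2-dimensional shared space. Real systems learn these
# weights during training; these values are hand-picked for illustration.
PROJECTIONS = {
    "text": [[0.5, 0.5, 0.0],
             [0.0, 0.5, 0.5]],   # 3-dim text features -> 2 shared dims
    "image": [[1.0, 0.0],
              [0.0, 1.0]],       # 2-dim image features -> 2 shared dims
}


def project(modality, features):
    """Map one modality's feature vector into the shared space, then L2-normalize it."""
    matrix = PROJECTIONS[modality]
    vec = [sum(w * x for w, x in zip(row, features)) for row in matrix]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # avoid dividing by zero
    return [v / norm for v in vec]


def cosine(a, b):
    """For unit-length vectors, cosine similarity is simply the dot product."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical features for one caption and two images.
caption = project("text", [0.2, 0.9, 0.1])
matching_image = project("image", [0.6, 0.5])
unrelated_image = project("image", [1.0, -1.0])
print(cosine(caption, matching_image) > cosine(caption, unrelated_image))  # True
```

Once every modality lives in the same space, a caption and an image that describe the same thing end up close together (cosine similarity near 1), which is what allows the system to reason over them jointly.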

At a practical level, multimodal AI works by pulling information from different sources and using it together to decide what to do next.

Take autonomous driving as an example. The system looks at data from cameras, LIDAR, radar, and audio sensors at the same time. Each source adds a piece of the puzzle: cameras show road signs and lanes, LIDAR measures distances to build a 3D picture of the surroundings, radar detects nearby objects, and audio helps identify sirens or horns. By combining all this information in real time, the vehicle can understand its surroundings and react quickly and safely.

This ability to process multiple inputs at once makes multimodal AI well suited to fast-changing and high-risk situations.

Multimodal AI is especially useful in industries where decisions depend on large amounts of data coming in quickly.

In healthcare, it brings together medical scans, patient history, and real-time vital signs to help doctors make better diagnoses and treatment decisions.

In manufacturing, it combines sensor data, machine images, and past maintenance records to spot problems early and prevent equipment breakdowns.

In retail, multimodal AI analyzes customer behavior, product images, and purchase history to personalize recommendations.

In security and surveillance, it uses video feeds, audio cues, and motion sensors to detect unusual activity and trigger alerts.

Teams with expertise in AI, machine learning, and neural networks build and manage these systems, working to keep the models accurate and able to scale as data volumes grow.

Multimodal AI is designed to understand and act on information from multiple types of data at the same time, such as text, images, audio, and sensor signals. Unlike traditional AI that focuses on a single data source, multimodal AI integrates these diverse inputs to produce smarter, more accurate decisions. Here’s a step-by-step look at how it works:

The first step involves gathering data from multiple sources. Text, images, videos, and audio are collected and structured so the system can process them efficiently. For text, words are broken into smaller units and converted into numerical representations. Images and videos are analyzed to detect patterns, objects, or movements, while audio signals are processed to capture meaningful sounds or speech. This ensures that all inputs are compatible with the AI model for further processing.
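As a rough illustration of this preparation step, the Python sketch below turns text into integer token ids, scales image pixels into a fixed range, and splits an audio signal into fixed-size frames. It is a simplified stand-in under stated assumptions: simple word splitting replaces the subword tokenizers real models use, images are assumed to be 8-bit grayscale grids, and all function names are hypothetical.

```python
import re


def tokenize(text):
    """Split text into lowercase word tokens (real systems usually use subword tokenizers)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_vocab(texts):
    """Give every distinct token an integer id; id 0 is reserved for unknown tokens."""
    vocab = {"<unk>": 0}
    for text in texts:
        for token in tokenize(text):
            vocab.setdefault(token, len(vocab))
    return vocab


def encode_text(text, vocab):
    """Convert text into the list of integer ids a model can consume."""
    return [vocab.get(token, 0) for token in tokenize(text)]


def normalize_pixels(image):
    """Scale 8-bit grayscale pixel values (0-255) into the range [0, 1]."""
    return [[pixel / 255 for pixel in row] for row in image]


def frame_audio(samples, frame_size):
    """Cut an audio signal into fixed-size frames, dropping an incomplete final frame."""
    return [samples[i:i + frame_size]
            for i in range(0, len(samples) - frame_size + 1, frame_size)]


vocab = build_vocab(["The car stopped", "A siren sounded"])
print(encode_text("the siren stopped suddenly", vocab))  # [1, 5, 3, 0]
```

The unseen word "suddenly" maps to the reserved unknown id 0, which is one simple way to keep encoding robust to vocabulary the system has not seen before.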

Once the data is prepared, the system aligns inputs across modalities to ensure they correspond to the same context. For example, video frames can be synchronized with audio or relevant text transcripts. This alignment allows the AI to understand relationships between different types of information, providing a unified perspective that is crucial for accurate interpretation.
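One simple way to picture alignment is matching timestamps. The Python sketch below assumes the transcript arrives as sorted, non-overlapping `(start, end, text)` segments measured in seconds, and attaches to each video frame the words spoken at that moment. The data layout and function name are hypothetical, and real systems may also learn alignments rather than rely on timestamps alone.

```python
import bisect


def align_frames_to_transcript(frame_times, segments):
    """Pair each video frame timestamp with the transcript text spoken at that moment.

    segments: (start_seconds, end_seconds, text) tuples, sorted by start time and
    non-overlapping. Frames outside every segment are paired with None.
    """
    starts = [start for start, _, _ in segments]
    aligned = []
    for t in frame_times:
        # Index of the last segment that starts at or before time t.
        i = bisect.bisect_right(starts, t) - 1
        if i >= 0 and t < segments[i][1]:
            aligned.append((t, segments[i][2]))
        else:
            aligned.append((t, None))
    return aligned


segments = [(0.0, 2.0, "turn left ahead"), (2.5, 4.0, "siren approaching")]
for frame_time, text in align_frames_to_transcript([0.5, 2.2, 3.0], segments):
    print(frame_time, text)
```

Using a binary search over segment start times keeps the lookup fast even for long videos with many transcript segments; frames that fall in a pause between segments are explicitly marked with `None`.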

After alignment, the system extracts the most important information, or features, from each data type. Text is analyzed to determine meaning and intent, images and videos are examined for objects and visual patterns, and audio is scanned for patterns like speech or alerts. Dimensionality reduction techniques are applied to remove unnecessary data, making processing faster and more efficient while retaining critical information.
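Dimensionality reduction can be done in several ways; principal component analysis (PCA) is a common choice. The Python sketch below uses a different, simpler technique, Gaussian random projection, which multiplies every feature vector by one shared random matrix and approximately preserves distances between vectors while shrinking them. The function name, the 64-to-8 sizes, and the randomly generated input features are illustrative assumptions.

```python
import math
import random


def random_projection(vectors, out_dim, seed=0):
    """Reduce every vector to out_dim dimensions with one shared Gaussian random matrix.

    Matrix entries are drawn from N(0, 1/out_dim), so squared vector lengths
    are preserved on average (the Johnson-Lindenstrauss idea).
    """
    in_dim = len(vectors[0])
    rng = random.Random(seed)  # fixed seed: the same matrix is reused for all vectors
    scale = 1 / math.sqrt(out_dim)
    matrix = [[rng.gauss(0.0, 1.0) * scale for _ in range(in_dim)]
              for _ in range(out_dim)]
    return [[sum(m * x for m, x in zip(row, v)) for row in matrix] for v in vectors]


# Illustrative input: three hypothetical 64-dimensional feature vectors.
data_rng = random.Random(42)
features = [[data_rng.random() for _ in range(64)] for _ in range(3)]
reduced = random_projection(features, out_dim=8)
print(len(reduced), len(reduced[0]))  # 3 8
```

Random projection needs no training, which makes it cheap; PCA instead fits the projection to the data by keeping the directions of greatest variance.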

Fusion is the core strength of multimodal AI. Features from all data sources are combined into a single, unified representation. Fusion can happen early, by combining raw data or low-level features before modeling, or late, by merging the outputs of separate per-modality models. Many systems use a hybrid approach that combines both strategies for more reliable and nuanced results. This integration enables the AI to make sense of complex situations by considering all inputs together.
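The two basic fusion strategies can be sketched in a few lines of Python. This is a simplified illustration, not a production design: early fusion is shown as plain concatenation of feature vectors (which a single downstream model would then consume), and late fusion as a weighted average of per-modality class probabilities. The modality names, probabilities, and weights are hypothetical; a hybrid system would combine both ideas.

```python
def early_fusion(features_by_modality):
    """Early fusion: concatenate per-modality feature vectors into one input vector."""
    fused = []
    for modality in sorted(features_by_modality):  # fixed order keeps positions stable
        fused.extend(features_by_modality[modality])
    return fused


def late_fusion(probabilities_by_modality, weights):
    """Late fusion: merge each modality model's class probabilities by weighted average."""
    total_weight = sum(weights[m] for m in probabilities_by_modality)
    num_classes = len(next(iter(probabilities_by_modality.values())))
    fused = [0.0] * num_classes
    for modality, probs in probabilities_by_modality.items():
        for k, p in enumerate(probs):
            fused[k] += weights[modality] * p / total_weight
    return fused


# Hypothetical early-fusion input: text and audio feature vectors.
print(early_fusion({"text": [0.1, 0.2], "audio": [0.9]}))  # [0.9, 0.1, 0.2]

# Hypothetical late-fusion input: two models each estimate [obstacle, clear]
# probabilities, and the camera model is trusted three times as much as radar.
print(late_fusion({"camera": [0.8, 0.2], "radar": [0.6, 0.4]},
                  {"camera": 0.75, "radar": 0.25}))
```

In late fusion, the weights express how much each modality is trusted; in a trained system they would be learned rather than set by hand.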

The AI system is then trained using advanced deep learning architectures like transformers, recurrent neural networks (RNNs), or temporal convolutional networks (TCNs). During training, the system learns how to weigh inputs from different modalities appropriately, improving its ability to generalize across varied scenarios and deliver accurate predictions.
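The architectures named above are far too large for a short example, but the core idea of learning how much to weigh each modality can be shown with a toy. The Python sketch below swaps in plain logistic regression trained by gradient descent on hypothetical, zero-centred per-modality scores; it is not a transformer, RNN, or TCN. In the made-up data, the image score fully determines the label while the audio score carries no information, so training should push the image weight up and leave the audio weight at zero.

```python
import math


def train_fusion_weights(examples, learning_rate=1.0, epochs=500):
    """Learn one weight per modality with logistic regression (no bias term).

    examples: list of (scores, label) pairs, where scores holds one zero-centred
    score per modality and label is 0 or 1.
    """
    num_modalities = len(examples[0][0])
    weights = [0.0] * num_modalities
    for _ in range(epochs):
        gradient = [0.0] * num_modalities
        for scores, label in examples:
            z = sum(w * s for w, s in zip(weights, scores))
            prediction = 1 / (1 + math.exp(-z))  # sigmoid
            for j, s in enumerate(scores):
                gradient[j] += (prediction - label) * s
        # Full-batch gradient descent step on the mean log-loss.
        weights = [w - learning_rate * g / len(examples)
                   for w, g in zip(weights, gradient)]
    return weights


# Hypothetical toy data: [image_score, audio_score] -> label.
# The image score decides the label; every image score appears with both
# audio scores, so the audio score says nothing about the label.
examples = []
for image_score in (-0.4, -0.2, 0.2, 0.4):
    label = 1 if image_score > 0 else 0
    for audio_score in (0.3, -0.3):
        examples.append(([image_score, audio_score], label))

image_weight, audio_weight = train_fusion_weights(examples)
print(f"image weight: {image_weight:.2f}, audio weight: {audio_weight:.2f}")
```

The learned weights end up reflecting how informative each modality is, which is the same principle large multimodal models apply at a much bigger scale.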

Once trained, multimodal AI can analyze new, unseen data and provide actionable insights. The model uses patterns learned during training to make decisions or predictions in real time. For instance, in healthcare, it can analyze patient records, medical scans, and doctors’ notes together to suggest diagnoses or treatment options, providing a holistic view of the situation.

Multimodal AI systems are continuously updated with new data to stay accurate and relevant. Reinforcement learning and feedback mechanisms allow the AI to adapt over time, improving performance and refining decision-making as it encounters more scenarios.
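Reinforcement learning is too involved for a short example, so the Python sketch below shows a simpler feedback mechanism instead: a multiplicative-weights update, a classic online-learning rule. After each round of feedback, any modality whose prediction turned out wrong has its trust weight multiplied by a penalty factor, and the weights are renormalized. The modality names, the 0.5 penalty, and the feedback sequence are hypothetical.

```python
def update_modality_trust(trust, errors, penalty=0.5):
    """Multiplicative-weights update driven by feedback.

    trust:   dict of modality -> current weight (weights sum to 1)
    errors:  dict of modality -> True if that modality's last prediction was wrong
    penalty: factor in (0, 1) applied to the weight of each wrong modality
    """
    updated = {m: w * (penalty if errors[m] else 1.0) for m, w in trust.items()}
    total = sum(updated.values())
    return {m: w / total for m, w in updated.items()}


trust = {"camera": 0.5, "audio": 0.5}
# Hypothetical feedback: the audio model was wrong in two consecutive rounds.
for feedback in ({"camera": False, "audio": True}, {"camera": False, "audio": True}):
    trust = update_modality_trust(trust, feedback)
print(trust)
```

Over time, modalities that keep making mistakes lose influence while reliable ones gain it, without retraining the whole model.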

Multimodal AI is transforming the way businesses and organizations operate by integrating data from multiple sources, such as text, images, audio, and sensors, to make smarter decisions. Its ability to process complex, diverse information simultaneously makes it valuable in many sectors. Here are 12 industry-specific examples showing how it’s being applied today:

Multimodal AI can combine medical imaging, patient records, and biometric data, which helps doctors detect diseases more accurately and suggest personalized treatment plans.

Self-driving cars use cameras, LIDAR, radar, and audio sensors together to understand traffic, identify obstacles, and make real-time driving decisions.

Retailers can leverage multimodal AI to analyze purchase history, product images, and customer reviews, which helps them deliver highly personalized shopping experiences, increasing engagement and sales.

Sensors, machinery images, and historical performance data are combined to predict equipment failures before they happen, reducing downtime and maintenance costs.

Multimodal AI is helpful in threat detection as well. In this scenario, video footage, audio sensors, and motion detectors work together to identify unusual activities or potential security threats in real time.

Multimodal AI integrates transaction data, behavioral patterns, and text-based communication to detect anomalies and prevent fraudulent activity faster than traditional systems.

Satellite images, drone footage, and sensor data are analyzed together to monitor crop health, optimize irrigation, and predict yield, improving efficiency for farmers.

By combining student interactions, written responses, and speech or video input, multimodal AI can create personalized learning paths and detect areas where learners need extra help.

Text, audio, and visual content are analyzed to recommend videos, music, or articles tailored to user preferences, increasing engagement on streaming platforms.

AI chatbots and virtual assistants use text, voice, and visual inputs from customers to understand issues more accurately and provide faster, context-aware solutions.

By analyzing sensor data, shipment images, and tracking information, multimodal AI helps companies manage inventory, reduce delays, and optimize delivery routes.

Data from sensors, weather forecasts, and consumption patterns are combined to predict demand, detect faults, and optimize energy distribution efficiently.

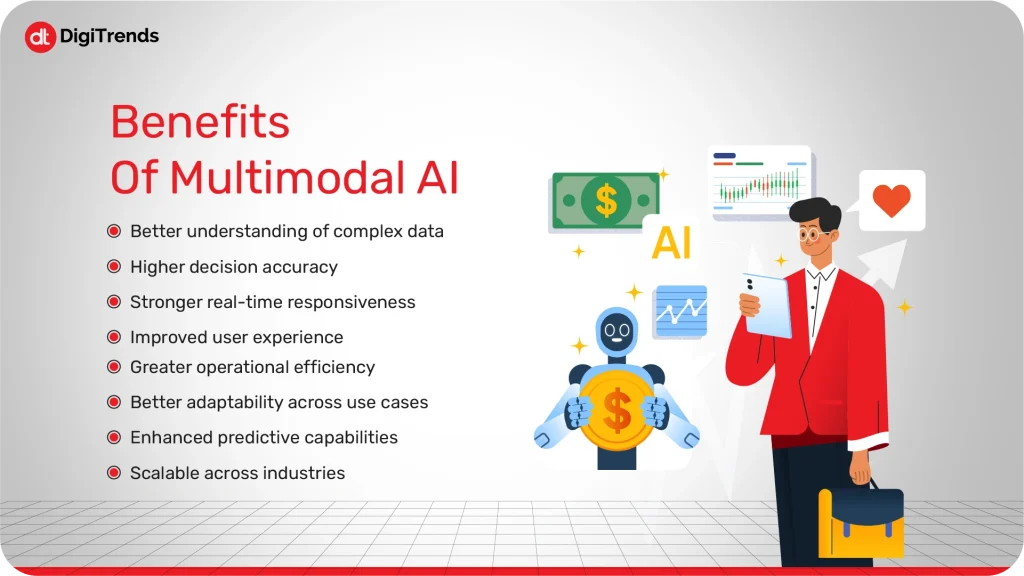

Multimodal AI brings together multiple types of data, such as text, images, audio, and sensor readings, to deliver smarter, faster, and more reliable insights. Here are the key benefits it offers:

By analyzing multiple data sources simultaneously, multimodal AI gains a more complete view of a situation. This holistic understanding helps systems detect patterns and relationships that single-modality AI might miss.

Cross-checking information from different inputs reduces errors and improves confidence in predictions. The system can weigh each data source appropriately, leading to more precise and reliable outcomes.

Multimodal AI can process diverse inputs simultaneously, making it ideal for environments where fast decisions matter, like autonomous vehicles, security monitoring, or financial trading.

By combining text, visuals, and audio, multimodal AI can interact with users more naturally and intuitively. This results in smoother interfaces, better recommendations, and more personalized experiences.

Automating analysis across multiple data streams reduces manual work, speeds up processes, and lowers operational costs, freeing teams to focus on higher-value tasks.

Because it can handle different types of data, multimodal AI works in dynamic environments where conditions and inputs change frequently. This flexibility makes it suitable for multiple industries.

Combining historical and real-time data allows multimodal AI to make accurate forecasts, detect early signs of problems, and support proactive decision-making.

From healthcare and manufacturing to retail and energy, multimodal AI can be adapted to different sectors without building entirely new systems, making it a versatile tool for large-scale adoption.

Multimodal AI is no longer just a concept; it’s powering real products and applications that combine text, images, audio, and other inputs to deliver smarter and more interactive experiences. Here are 10 notable examples:

GPT-4 can process both text and images, allowing users to ask questions about photos, interpret diagrams, or combine visual and textual context for complex problem-solving. This makes it well suited to education, content creation, and research applications.

Google Lens uses images, text, and sometimes audio cues to provide instant information about the environment. Users can point their camera at an object, sign, or document, and the system can recognize it, translate text, or suggest actions based on visual context.

Microsoft Copilot, integrated into Office apps, combines document text, tables, charts, and user inputs to generate summaries, draft emails, or create reports. By analyzing multiple data types at once, it helps professionals save time and make more accurate decisions.

Clara is a healthcare AI platform that merges medical imaging, electronic health records, and genomic data. It assists radiologists and clinicians by providing enhanced diagnostic suggestions and supporting personalized treatment plans.

Alexa combines voice input with contextual information like user preferences, past commands, and connected device data. This allows it to deliver personalized recommendations, control smart home devices, and respond accurately to complex queries.

Watson Discovery integrates text, images, and structured data to provide insights for businesses. It can analyze documents, detect patterns, and surface relevant information for research, customer support, and decision-making processes.

Hugging Face provides AI models that handle both text and images, enabling tasks such as generating captions for images, visual question answering, and multimodal sentiment analysis. These tools are widely used in research and product prototyping.

Adobe AI powers creative tools by combining visual, textual, and behavioral data. It assists designers with content recommendations, automated image editing, and personalized marketing campaigns, improving both creativity and efficiency.

TikTok leverages video, audio, and textual metadata to recommend content to users. By analyzing multiple modalities, it can predict user preferences accurately, keeping engagement high and making content discovery seamless.

Affectiva uses video, audio, and physiological signals to understand human emotions. It finds applications in automotive safety, market research, and UX testing by analyzing how users react in real time to stimuli.

DigiTrends offers tailored AI development services that help businesses unlock real value from data. Our team builds custom multimodal AI solutions that integrate text, images, audio, and sensor inputs into a single intelligent system. Whether you need an AI assistant that understands customer queries across formats, a predictive maintenance tool for industrial equipment, or a decision-support platform for healthcare, DigiTrends designs and deploys models that fit real-world needs.

We handle the full lifecycle, from data strategy and model training to deployment and ongoing optimization, so companies can adopt AI without the usual technical friction. With a focus on performance, scalability, and practical impact, DigiTrends helps organizations accelerate innovation and solve complex problems with confidence.

Multimodal AI is no longer just a futuristic concept; it’s reshaping how businesses, industries, and everyday applications process information. By combining text, images, audio, and sensor data, these systems provide a deeper understanding of complex scenarios, enable faster and more accurate decision-making, and deliver highly personalized experiences.

From healthcare and autonomous vehicles to retail, finance, and education, multimodal AI is proving its value across sectors, driving efficiency, innovation, and better outcomes. As platforms, applications, and solutions continue to evolve, organizations that leverage multimodal AI can gain a clear competitive advantage in handling complex data and making informed decisions in real time.

Whether it’s powering smarter apps, improving operational processes, or supporting critical decision-making, multimodal AI represents the next step in intelligent, context-aware technology, and companies like DigiTrends are helping make it practical and accessible for real-world impact.